Rivian Plans to Introduce Lidar in 2026, Claims Tesla's Camera System Is Insufficient

Rivian has made a bold statement, suggesting that Tesla is mistaken in its belief that self-driving cars can be created using only cameras.

At the automaker’s AI and Autonomy Day in Palo Alto, California, Rivian introduced its proprietary silicon, outlined a roadmap for its next-generation hands-free driver-assist system, presented an AI-driven assistant for text messaging, and announced the integration of lidar hardware.

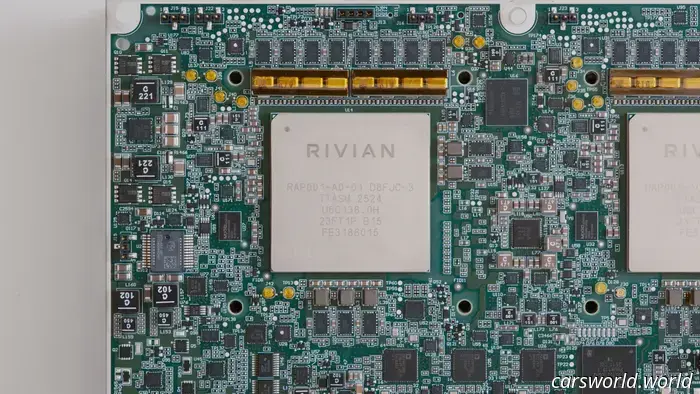

The centerpiece of this announcement is Rivian’s newly designed silicon chip, known as the Rivian Autonomy Processor (RAP1), which will replace the current Nvidia chip. This 5nm chip consolidates processing and memory into a single module and works in conjunction with the automaker’s Gen 3 Autonomy Computer (ACM3), evolving from the ACM2 that was launched in 2025 with the second-generation R1. While RAP1 is crucial for Rivian’s self-driving goals, the inclusion of lidar hardware indicates that Rivian believes the approach of its competitors is fundamentally flawed.

Lidar will first be featured on the upcoming R2 model, set to launch at the end of 2026. The company did not disclose when or if lidar will be integrated into the R1 models, but it’s difficult to imagine that the more premium flagship models will not adopt this technology shortly after the new R2 is released.

Rivian's Universal Hands-Free (UHF) driver-assist system will be available later this month on second-generation R1 models via a free over-the-air software update, version 2025.46. This system will evolve into a more sophisticated version with the addition of lidar. The lidar hardware will provide three-dimensional spatial data, redundant sensing, and enhanced real-time detection for complex driving scenarios that the current in-house developed RTK GNSS system (which uses GPS and ground station signals to accurately pinpoint the vehicle’s location) might not capture effectively. The current setup employs 10 external cameras, 12 ultrasonic sensors, 5 radar units, and a high-precision GPS receiver.

A Rivian spokesperson exclusively told The Drive that lidar is essential and that a camera-only approach is insufficient. That stance contrasts with Tesla’s self-proclaimed Full Self-Driving system, which relies solely on cameras and has removed forward-facing radar. The spokesperson's reasoning: “Cameras are a passive light source, which means they perform poorly in low-light situations or fog compared to active light sources like lidar.”

According to the spokesperson, lidar can actually enhance visibility at night: “Cameras are effective until they reach their limits,” they stated.

Sam Abuelsamid, vice president of market research at Telemetry, told The Drive that “Utilizing multiple sensing modalities is crucial for developing a reliable and safe automated driving system. Each sensor type has its unique advantages and limitations, and they complement one another. Cameras excel at object classification but struggle in low-light conditions or with direct sunlight. Unless configured for depth perception, they also fall short in accurately gauging the distance to objects.”

“Lidar offers a middle ground in terms of resolution between cameras and radar, functioning effectively in all lighting conditions, and modern software can even handle rain and snow. While it has historically been expensive, recent advancements have significantly reduced solid-state lidar prices, with some new options available for under $200,” Abuelsamid noted.

He further added, “When proponents of camera-only systems argue that humans drive with just two eyes, they overlook several factors. Humans use multiple senses while driving, employing stereoscopic vision for depth perception (which Tesla lacks), along with hearing and touch, interpreting feedback through their hands and body. Human eyes possess a greater dynamic range than cameras, making them much more effective in challenging lighting situations, and the human brain processes information in a distinct manner, allowing for better filtering of extraneous data and improved classification of visual input.”

Rivian told The Drive that at launch, lidar-equipped vehicles will incorporate a new augmented reality display for drivers, along with enhanced object detection, particularly for distant objects and in difficult conditions. The extensive spatial data collected from these vehicles will also help improve onboard model performance for all second-generation R1 and later models, including those without lidar. In the long term, vehicles equipped with lidar will gain exclusive autonomous features enabled by the additional sensor and onboard computing capabilities.

Both the lidar and the ACM3, paired with the RAP1 chip, are currently undergoing validation, and Rivian anticipates delivering this new hardware on the R2 at the end of 2026.


